Python3机器学习入门(二)

k近邻算法——基础的分类算法

-

这个算法的好处是比较简单,分类识别效果较好

-

假设现在有两组数据

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19raw_data_X = [[3.39,2.33], #横坐标为肿瘤大小,纵坐标为时间

[3.11,1.78],

[1.34,3.36],

[3.58,4.68],

[2.28,2.87],

[7.42,4.69],

[5.74,3.53],

[9.17,2.51],

[7.79,3.42],

[7.93,0.79]]

raw_data_y = [0,0,0,0,0,1,1,1,1,1] #0为良性肿瘤,1为恶性肿瘤

#接收这两组数据,并绘散点图

x_train = np.array(raw_data_X)

y_train = np.array(raw_data_y)

plt.scatter(x_train[y_train==0,0],x_train[y_train==0,1],color="g")

plt.scatter(x_train[y_train==1,0],x_train[y_train==1,1],color="r")

plt.show()

-

先假设有一个新的数据,要判断其是不是恶性肿瘤

1

2

3

4

5

6

7x = np.array([8.09, 3.36]) #新的肿瘤数据

#将上面新的数据加入散点图中观察

plt.scatter(x_train[y_train==0,0],x_train[y_train==0,1],color="g")

plt.scatter(x_train[y_train==1,0],x_train[y_train==1,1],color="r")

plt.scatter(x[0],x[1],color="b")

plt.show()

-

很明显,新增的蓝色点属于恶性肿瘤,怎么通过k近邻算法得到呢?

-

即:新增点与哪k个点最近,用欧拉公式简单得到

1

2

3

4

5

6

7

8

9

10

11

12

13from math import sqrt

distances = [sqrt(np.sum((x_train-x)**2)) for x_train in x_train]

#现在新增点与所有点的距离都保存在distances数组

nearest = np.argsort(distances) #将点用索引排序

k = 6 #假设k=6

topK_y = [y_train[i] for i in nearest[:k]] #获得前k个点对应的肿瘤分类

from collections import Counter

votes = Counter(topK_y) #统计票数 这里结果为 Counter({1: 5, 0: 1})

votes.most_common(1) #获得前1个票数最多的[(key,value)]

predict_y = votes.most_common(1)[0][0] #取得key,即肿瘤分类

#结果为1,即新增点被预测为恶性肿瘤 -

调用sckil-learn中的kNN方法

1

2

3

4

5

6from sklearn.neighbors import KNeighborsClassifier

kNN_classifier = KNeighborsClassifier(n_neighbors=6) #传入k值

kNN_classifier.fit(x_train,y_train)

X_predict = x.reshape(1,-1)

y_predict = kNN_classifier.predict(X_predict) #要传入一个二维数组

y_predict[0] #获得结果 -

外部写一个高仿版sckil-learn的kNN算法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33class KNNClassifier:

def __init__(self, k):

assert k >=1, "k must be valid"

self.k = k

self._X_train = None

self._y_train = None

def fit(self, X_train, y_train):

self._X_train = X_train

self._y_train = y_train

return self

def predict(self, X_predict):

y_predict = [self._predict(x) for x in X_predict]

return np.array(y_predict)

def _predict(self, x):

distances = [sqrt(np.sum((x_train - x) ** 2)) for x_train in self._X_train]

nearest = np.argsort(distances)

topK_y = [self._y_train[i] for i in nearest[:self.k]]

votes = Counter(topK_y)

return votes.most_common(1)[0][0]

def __repr__(self):

return "kNN(k=%d)" % self.k

训练与测试数据集

-

取测试数据,两个关系矩阵要同时乱序

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27#乱序索引即可,自行实现一个train_test_split

import numpy as np

def train_test_split(X, y, test_radio=2, seed=None):

assert X.shape[0] == y.shape[0],\

"the size of X must be equal to the size of y"

assert 0.0 <= test_radio <= 1.0,\

"test_ration must be valid"

if seed:

np.random.seed(seed)

shuffled_indexes = np.random.permutation(len(X))

test_size = int(len(X)*test_radio)

test_indexes = shuffled_indexes[:test_size]

train_indexes = shuffled_indexes[test_size:]

X_train = X[train_indexes]

y_train = y[train_indexes]

X_test = X[test_indexes]

y_test = y[test_indexes]

return X_train, X_test, y_train, y_test; -

测试算法准确率

1

sum(y_predict == y_test)

-

使用sklearn中的train_test_split

1

2

3

4

5from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(x,y,test_size=0.2)

#这里0.2指的是前20%作为训练集,后80%作为测试集

#将获得的X_train和y_train作为模型,X_test放入写好的算法测试,得到y_predict与y_test比对正确率

超参数

-

超参数:运行机器学习前要指定的参数,例如kNN中的k

-

模型参数:算法过程中学习的参数

-

下面写一个 网格搜索法 寻找最好的k

-

要考虑平票问题,加上距离权重即可

1

2

3for method in ["uniform","distance"]:

for k in range(1,100):

knn_clf = KNeighborsClassifier(n_neighbors=k, weights=method) -

距离:欧拉距离、曼哈顿距离 拓展为 明可夫斯基距离(新的超参数p)

-

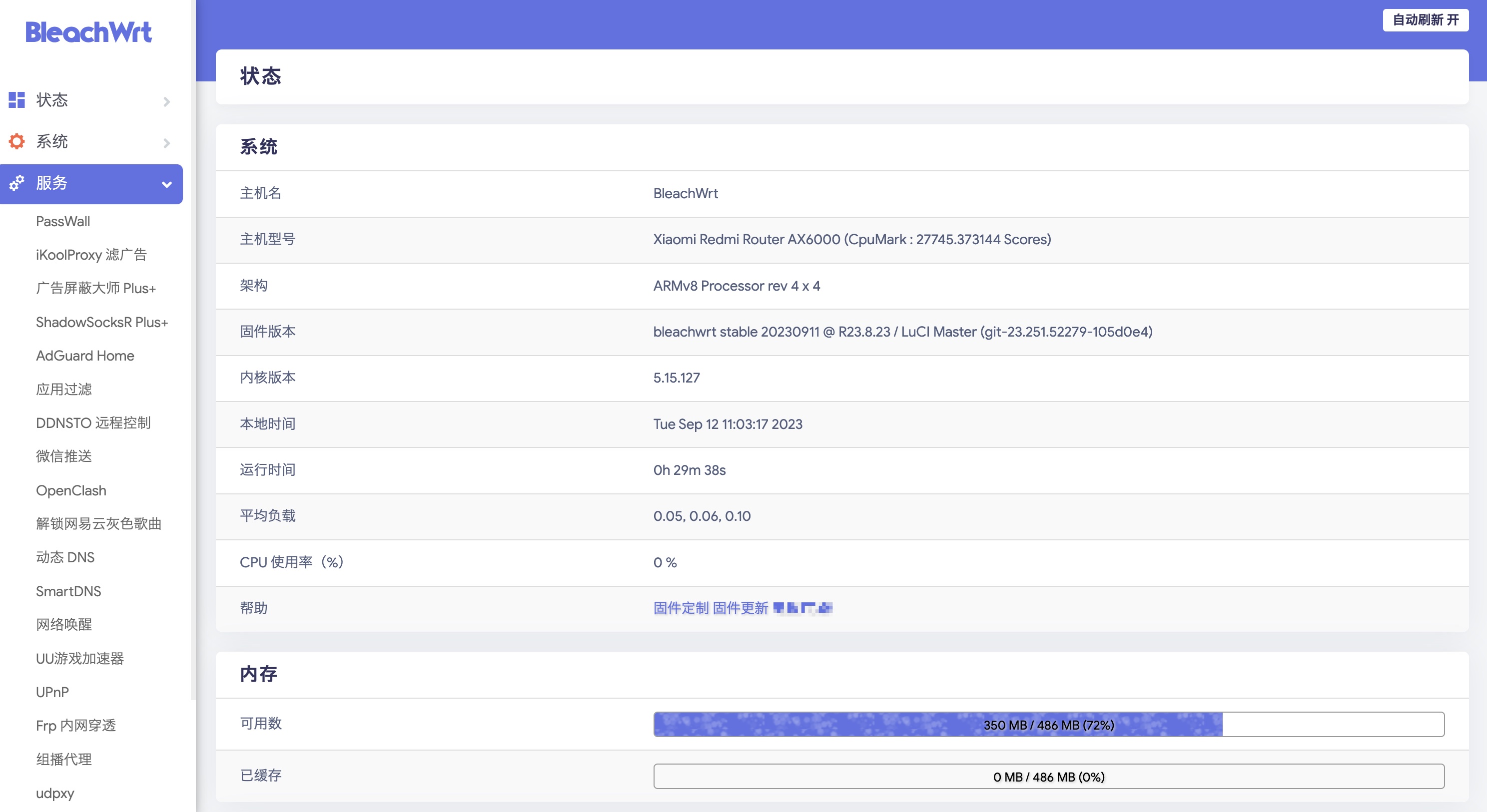

最后程序运行结果如下图(考虑了3个超参数:p / method / k)

-

更多距离定义

- 向量空间余弦相似度 Cosine Similarity

- 调整余弦相似度 Adjusted Cosine Similarity

- 皮尔森相关系数 Pearson Correlation Coefficient

- Jaccard相似系数 Jaccard Coefficient

网格搜索法 Grid Search

-

首先指定超参数取值范围

1

2

3

4

5

6

7

8

9

10

11param_grid = [

{

'weights':['uniform'],

'n_neighbors':[i for i in range(1,11)]

},

{

'weights':['distance'],

'n_neighbors':[i for i in range(1,11)]

'p':[i for i in range(1,6)]

}

] -

进行搜索

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15knn_clf = KNeighborsClassifier()

#CV是交叉验证的意思

from sklearn.model_selection import GridSearchCV

#n_jobs代表用计算机的核数,-1位全部

#verbose代表输出信息,数值越大越详细

grid_search = GridSearchCV(knn_clf, param_grid, n_jobs=-1, verbose=2)

grid_search.fit(X_train,y_train)

#最佳分类器

grid_search.best_estimator_

#分类器正确率

grid_search.best_score_

数据归一化

-

将所有数据映射到同一尺度中

-

最值归一化:把所有数据映射到0~1间,适用于有明显边界的情况

-

均值方差归一化:所有数据归一到均值为0方差为1的分布中,适用于数据分布没有明显边界 (S为方差)

-

使用StandardScaler

1

2

3

4

5

6

7

8

9

10

11

12from sklearn.preprocessing import StandardScaler

standardScaler = StandardScaler()

standardScaler.fit(X_train)

#得到每列的均值

standardScaler.mean_

#得到每列的方差

standardScaler.scale_

#用transform进行均值方差归一化

X_train = standardScaler.transform(X_train)

X_test_standard = standardScaler.transform(X_test) -

自行实现一个StandardScaler

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30import numpy as np

class StandardScaler:

def __init__(self):

self.mean_ = None

self.scale_ = None;

def fit(self, X):

"""根据训练数据集获得数据均值与方差"""

assert X.ndim == 2,"The dimension of X must be 2"

self.mean_ = np.array([np.mean(X[:,i]) for i in range(X.shape[1])])

self.scale_ = np.array([np.std(X[:, i]) for i in range(X.shape[1])])

return self

def transform(self,X):

"""根据X进行均值方差归一化"""

assert X.ndim == 2, "The dimension of X must be 2"

assert self.mean_ is not None and self.scale_ is not None, \

"must fit before transform!"

assert X.shape[1] == len(self.mean_), \

"The feature number of X must be equal to mean_ and std_"

resX = np.empty(shape=X.shape,dtype=float)

for col in range(X.shape[1]):

resX[:,col] = (X[:,col] - self.mean_[col]) / self.scale_[col]

return resX

线性回归法 Linear Regression

简单介紹

- 用于解决回归问题

- 思想简单,实现容易

- 有许多强大的非线性模型基础

- 结果具有较好的可解释性

- 样本只有一个,称为:简单线性回归

最小二乘法

-

对目标函数求导,获得极值点

-

推导过程如下

-

接下来根据上述推导结果实现线性回归

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48import numpy as np

class SimpleLinearRegressionOne:

def __init__(self):

"""初始化Simple Linear Regression函数"""

self.a_ = None

self.b_ = None

def fit(self, x_train, y_train):

"""根据训练数据集x_train,y_train训练Simple Linear Regression模型"""

assert x_train.ndim == 1, \

"Simple Linear Regressor can only solve single feature training data."

assert len(x_train) == len(y_train),\

"the size of x_train must be equal to the size of y_train"

x_mean = np.mean(x_train)

y_mean = np.mean(y_train)

"""分子和分母"""

num = 0.0

d = 0.0

for x,y in zip(x_train, y_train):

num += (x - x_mean) * (y - y_mean)

d += (x - x_mean) ** 2

self.a_ = num/d

self.b_ = y_mean - self.a_ * x_mean

return self

def predict(self, x_predict):

"""预测数据集x_predict,返回x_predict得到的结果向量"""

assert x_predict.ndim == 1, \

"Simple Linear Regressor can only solve single feature training data."

assert self.a_ is not None and self.b_ is not None,\

"must fit before predict"

return np.array([self._predict(x) for x in x_predict])

def _predict(self, x_single):

"""给定单个预测数x_single,返回预测结果"""

return self.a_ * x_single + self.b_

def __repr__(self):

return "SimpleLinearRegressionOne()" -

根据预测结果绘制图片,得到结果如下

向量化

-

在上一节用for循环实现了简单的线性回归,但性能较低,而用向量替代for循环进行计算将提高效率

1

2

3

4"""分子和分母"""

num = (x_train - x_mean).dot(y_train - y_mean)

d = (x_train - x_mean).dot(x_train - x_mean)

#dot方法相当于向量间点乘 -

for循环与向量化运算两种方法的性能比较如下 (reg2为向量化运算)

-

可以看出,向量化运算的方法极大提高了运行效率

衡量线性回归法

-

均方误差 MSE (Mean Squared Error)

-

均方根误差 RMSE (Root Mean Squared Error)

-

平均绝对误差 MAE (Mean Absolute Error)

注意:RMSE和MAE的量纲和测试数据是一致的,他们的区别:RMSE有放大样本差距的趋势,而MAE没有,通常来说让RMSE更小意义更大

-

使用scikit-learn中的MSE和MAE

1

2

3

4

5from sklearn.metrics import mean_squared_error

from sklearn.metrics import mean_absolute_error

#使用举例

mean_squared_error(y_test, y_predict)

最好的衡量线性回归指标

-

之前提到的几种衡量指标针对不同模型会有不同的值,比如预测房价误差可能是10000元,但预测学生分数误差是10分,这样无法去比较并评价其算法更适用于哪种环境。

-

R Squared衡量指标

- R²≤1

- R²越大越好,若预测模型不犯任何错误,R²=1(最大值)

- 当模型为基准模型,R²=0

- 若R² < 0,说明模型不如基准模型,因此可能数据不存在线性关系

-

上面的公式上下除以m,变为下列形式

-

简单代码实现

1

2#下列表达式计算出来即为R Squared的值

1 - mean_squared_error(y_test, y_predict) / np.var(y_test) -

使用sklearn中的方法

1

2from sklearn.metrics import r2_score

r2_score(y_test, y_predict)

多元线性回归和正规方程解

-

多元线性回归

-

正规方程解

时间复杂度 O(n³) 高,但不需要对数据做归一化处理

实现多元线性回归

-

Python代码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40import numpy as np

from .metrics import r2_score

class LinearRegression:

def __init__(self):

"""初始化 Linear Regression 模型"""

self.coef_ = None

self.interception_ = None

self._theta = None

def fit_normal(self, X_train, y_train):

"""根据训练数据集X_train, y_train训练Linear Regression模型"""

assert X_train.shape[0] == y_train.shape[0], \

"the size of X_train must be equal to the size of y_train"

X_b = np.hstack([np.ones((len(X_train),1)), X_train])

self._theta = np.linalg.inv(X_b.T.dot(X_b)).dot(X_b.T).dot(y_train);

self.interception_ = self._theta[0]

self.coef_ = self._theta[1:]

return self

def predict(self, X_predict):

"""给定待预测数据集X_predict,返回表示X_predict的结果向量"""

assert self.interception_ is not None and self.coef_ is not None, \

"must fit before predict!"

assert X_predict.shape[1] == len(self.coef_), \

"the feature number of X_predict must be equal to X_train"

X_b = np.hstack([np.ones((len(X_predict),1)), X_predict])

return X_b.dot(self._theta)

def score(self, X_test, y_test):

"""根据测试数据集X_test 和 y_test确定当前模型准确度"""

y_predict = self.predict(X_test)

return r2_score(y_test, y_predict)

def __init__(self):

return "LinearRegression()"

正在持续更新…最近更新:2020-09-21